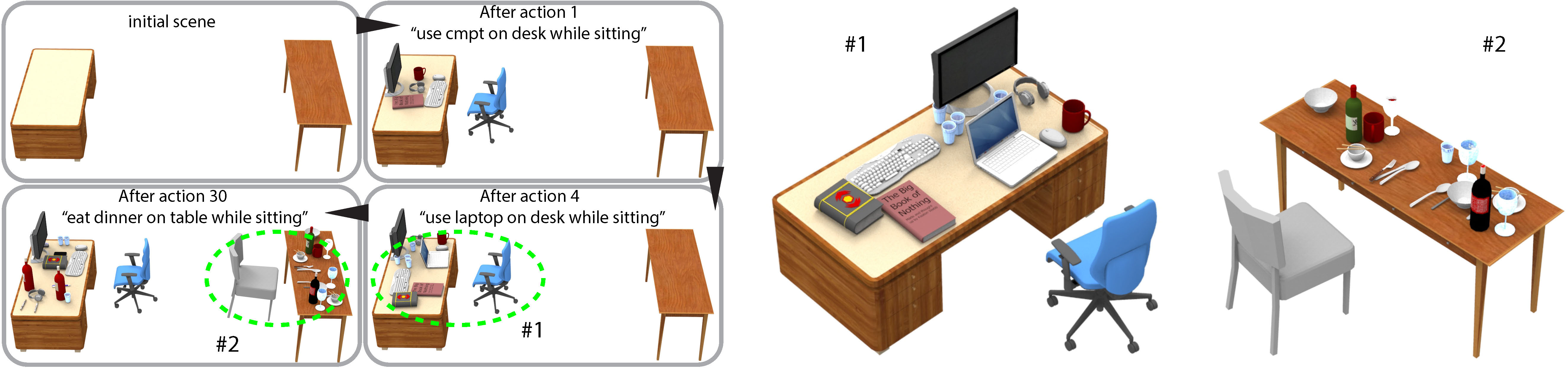

We introduce a framework for action-driven evolution of 3D indoor scenes, where the goal is to simulate how scenes are "messed up" by human actions, and specifically by the object placements those actions necessitate. To this end, we develop an action model in which each type of action combines information about one or more human poses, one or more object categories, and the spatial configurations of objects in these categories, which summarize the object-object and object-human relations for the action; all of this information is learned from annotated photos. Correlations between the learned actions are analyzed and guide the construction of an action graph. Starting with an initial 3D scene, we probabilistically sample sequences of actions from the action graph to drive progressive scene evolution. Each applied action triggers appropriate object placements based on the object co-occurrences and spatial configurations learned for the action model. We show scene evolution results that lead to realistic, messy 3D scenes. Evaluations include user studies that compare our method to manual scene creation and to state-of-the-art data-driven methods in terms of scene plausibility and naturalness.
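To make the evolution loop concrete, here is a minimal sketch, not the authors' implementation, assuming the action graph can be treated as a weighted directed graph and action sequences are sampled by a random walk whose transition probabilities are proportional to edge weights. The names `sample_action_sequence`, `evolve_scene`, `ACTION_OBJECTS`, the action labels, and all weights are hypothetical. Object placement is reduced to appending the categories tied to each action; the learned spatial configurations and co-occurrence statistics are deliberately omitted.

```python
import random
from typing import Dict, List

# Hypothetical action graph: each action maps to successor actions with
# positive weights (assumed here to stand in for learned action correlations).
ActionGraph = Dict[str, Dict[str, float]]

# Hypothetical object categories triggered by each action (illustrative only).
ACTION_OBJECTS: Dict[str, List[str]] = {
    "read_book": ["book", "lamp"],
    "drink_coffee": ["mug"],
    "use_laptop": ["laptop", "mouse"],
}


def sample_action_sequence(graph: ActionGraph, start: str, length: int,
                           seed: int = 0) -> List[str]:
    """Random walk over the action graph; each next action is chosen with
    probability proportional to its outgoing edge weight."""
    rng = random.Random(seed)
    sequence = [start]
    current = start
    while len(sequence) < length:
        successors = graph.get(current, {})
        if not successors:
            break  # dead end: the current action has no outgoing edges
        actions = list(successors)
        weights = [successors[a] for a in actions]
        current = rng.choices(actions, weights=weights, k=1)[0]
        sequence.append(current)
    return sequence


def evolve_scene(scene: List[str], actions: List[str]) -> List[str]:
    """Return a new scene with the object categories of each applied action
    appended (placement geometry is not modeled in this sketch)."""
    placed = [obj for action in actions for obj in ACTION_OBJECTS.get(action, [])]
    return scene + placed


# Toy graph with illustrative weights (not values from the paper).
graph: ActionGraph = {
    "read_book": {"drink_coffee": 0.6, "use_laptop": 0.4},
    "drink_coffee": {"read_book": 0.5, "use_laptop": 0.5},
    "use_laptop": {"drink_coffee": 0.7, "read_book": 0.3},
}

actions = sample_action_sequence(graph, "read_book", length=4, seed=42)
print(actions)
print(evolve_scene(["desk", "chair"], actions))
```

Fixing the seed makes a sampled evolution reproducible, while varying it produces different plausible action histories from the same initial scene.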

We would like to thank all the reviewers for their comments and suggestions. We are grateful to Matthew Fisher for his help in providing data and results during our evaluation. Thanks also go to Ashutosh Saxena for offline discussions on related work. The first author carried out the earlier phase of this research at Microsoft Research Asia, supported by Microsoft Research Asia and a MITACS Globalink Research Award. This work was also supported in part by NSERC (611370), NSFC (61502153, 61602406), and the NSF of Hunan Province of China (2016JJ3031). We also thank Jaime Vargas for the video voice-over.